I generally think these are more thought-experiment problems looking for solutions in a vacuum. They are almost all just problems that ignore the possibility of any sort of new innovation, which is how we got here in the first place. I suppose that in 2017 I would have said that LLMs would never exist. I was against most of the NLP world and found it generally useless... but then, suddenly, in 2018... Transformers. Revolutionary and disruptive change. Most of the people I see talking about AI slop ruining future training are in the same camp as the overzealous Luddites who look for any excuse to hate on a truly disruptive technology. I suppose we all need our villains.

You do realize that AI/ML is directly within my primary field of expertise, correct? I'm talking about the real-world impact of model corruption in the commercial world: testable, observable, repeatable corruption. Many companies are losing their taste for AI/ML/LLMs, and for Python as well, because these technologies are failing to deliver on their promise. (Python has a significantly higher cost of ownership and maintenance than most other common programming languages. It's only disruptive in that it disrupts business operations far more than the other ways of accomplishing the same thing.) The failure of the major AI engines and bolt-on technologies to provide consistent, accurate results is eroding the confidence of real-world users. Businesses are losing the will to bet their success on AI. For the vast majority of the business community, it has become a marketing term and not much else. The big players (Nvidia, Microsoft, Google, Tesla, etc.) are pushing the narrative, but they have yet to deliver real results. Maybe that will change with quantum computing... or maybe they will just fail faster. The outcome remains to be seen.

Given the current state of the technology, would you bet your life savings on an AI-driven trading program? Would you bet your life on an AI doctor diagnosing a complex set of symptoms and building a treatment plan without oversight by a physician you trusted? Would you close your eyes, go to sleep, and trust an AI car to take you across the country and arrive safely at your destination?

I wouldn't. And I work in the heart of the tech industry at the Consulting Senior/Enterprise Architect level. IMO, AI is currently at an exciting stage of research but has been rushed into production prematurely. I'm sure a day will come when it is capable of doing miraculous things. We aren't there yet. We're not even close.

I could take the opposite position and say that every new technology has its worshipers who believe it is the answer to everything. I could also argue that every generation thinks it knows more than the generation that came before. I can't tell you how many young programmers, DBAs, analysts, and other technical people I've seen who think the older generation of technical people are "Luddites" (to use your word). What they all seem to have in common is that they fail to realize that nothing is really new. It's the same stuff, repackaged, rebranded, and marketed as the next big thing. What do you think was the last truly original concept in technology?

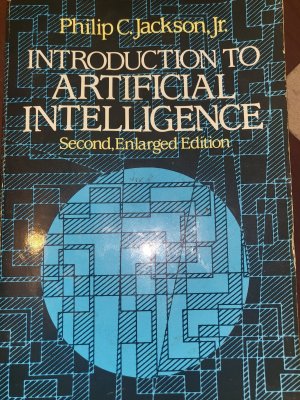

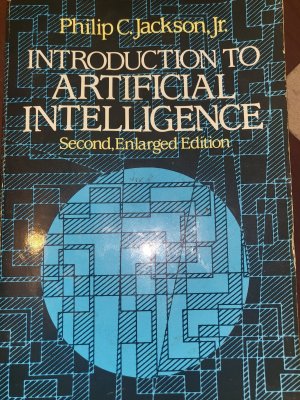

I received my original copy of this book for my birthday when I was in 4th grade in the late '70s. It was written in the 1960s. This one is the reprint from the 1980s (1984? I'm not walking downstairs to look at the flyleaf). Almost everything being done today in "AI" is described in detail in this book, with accompanying diagrams and supporting math. It's not new, and we aren't disregarding it because we don't understand it. We've already tried it, assessed it, and determined where it is useful and where it is not. We know the pros and cons and aren't caught up in the hype. We keep up with the technology but look at it through the lens of experience. We've incorporated the concepts into our work in a way that is mature and useful. We're not opposed to the idea; we're opposed to the implementation.

The truth is somewhere in the middle and needs to be analyzed objectively, with an eye on the requirements of the specific task. There are components of AI/ML that are very useful and can and should be incorporated into expert systems, but there are also parts so immature that including them invalidates the system as a whole.

It's the old adage: when all you have is a hammer, every problem looks like a nail.